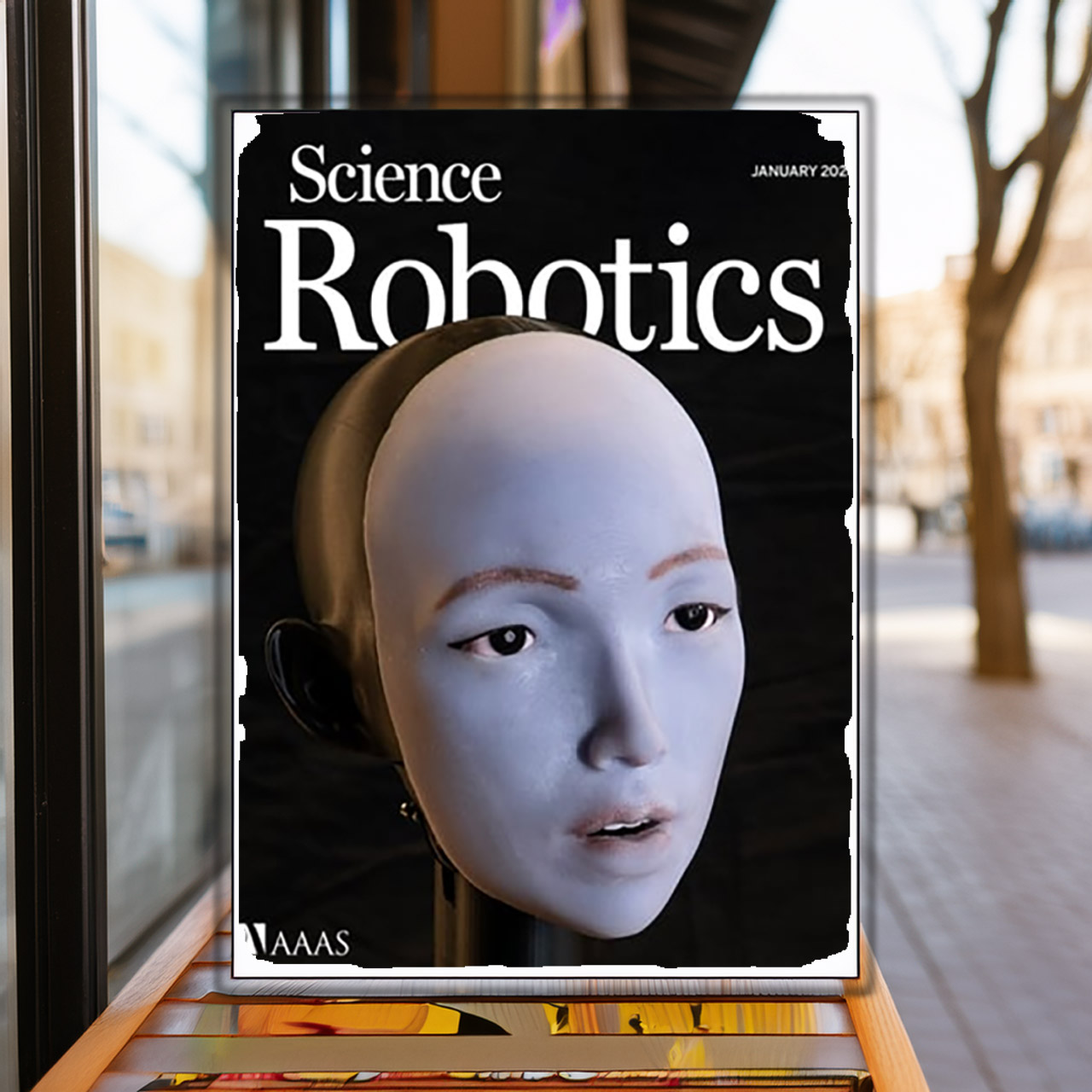

Columbia University Engineers Unveil Emo, a Lip-Syncing Robot with Human Facial Gestures

A robotic head named Emo sits still until it hears a sound. Then its lips begin to move, shaping each word with impressive accuracy. The system was developed by a team of Columbia University researchers, and the results were published in Science Robotics just a few days ago.

The engineers gave Emo a flexible silicone skin stretched over a frame. Behind that skin sit 26 tiny motors, each able to push or pull with great precision, which lets the face form vowels, consonants, and even the sustained stretch of a sung note. The motors move quickly and silently, and the resulting facial movements look smooth rather than jerky.

Yuhang Hu led the project as part of his PhD, working in Hod Lipson’s Creative Machines Lab. Rather than programming the robot with a slew of hard-coded rules for every sound, the team had Emo learn by observation. First, the robot was placed in front of a mirror and made to display thousands of random expressions while watching how its own motor commands changed its reflection. Through this self-supervised practice, Emo began to learn the direct relationship between the commands it was given and the expressions it actually produced.
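The mirror stage resembles what roboticists call motor babbling: issue random commands, record what they produce, then invert that record so a desired expression can be turned back into a command. The sketch below is a toy illustration under stated assumptions, not the Columbia team's method. The "mirror" is a made-up function standing in for the camera, `NUM_LANDMARKS` and every function name are hypothetical, and the inverse model is a simple nearest-neighbour lookup rather than whatever model the researchers actually trained.

```python
import math
import random

NUM_MOTORS = 26     # motor count reported in the article
NUM_LANDMARKS = 4   # hypothetical: a few 2-D lip landmarks seen in the mirror


def make_mirror(seed=0):
    """Hypothetical stand-in for the mirror and camera: a fixed mapping,
    unknown to the learner, from motor commands to landmark coordinates."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(NUM_MOTORS)]
               for _ in range(NUM_LANDMARKS * 2)]

    def observe(command):
        # Each observed coordinate is a smooth, nonlinear function of the command.
        return [math.tanh(sum(w * c for w, c in zip(row, command)))
                for row in weights]

    return observe


def babble(observe, trials, seed=1):
    """Motor babbling: issue random commands and record what the mirror shows."""
    rng = random.Random(seed)
    memory = []
    for _ in range(trials):
        command = [rng.uniform(0, 1) for _ in range(NUM_MOTORS)]
        memory.append((observe(command), command))
    return memory


def inverse_model(memory, target_face):
    """Memory-based inverse model: return the stored command whose observed
    face is closest (squared Euclidean distance) to the target face."""
    def dist(face):
        return sum((a - b) ** 2 for a, b in zip(face, target_face))

    _, best_command = min(memory, key=lambda pair: dist(pair[0]))
    return best_command
```

With more babbling trials the table of remembered faces grows denser, so the nearest stored face tends to sit closer to any requested expression. A practical system would fit a smooth model instead, so it can interpolate between trials rather than only replay them.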

Next came hours of YouTube videos, in which Emo watched people talk in a variety of languages and sing songs. The AI learned the link between the audio waveform and the mouth shape in each frame, so no understanding or translation of the words was required. Because the algorithm maps sound directly to motion, Emo could handle French, Arabic, and Chinese without any prior training in those languages.
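To make the idea of mapping sound straight to motion concrete, here is a deliberately crude, hand-written baseline. It slices a mono waveform into frames, measures each frame's RMS energy, and turns louder frames into a wider mouth opening. This is an illustrative assumption, not Emo's model, which learned its mapping from video; the function names, the frame length, and the `smoothing` parameter are all hypothetical. Like the approach described above, it never looks at words, so it treats every language the same way.

```python
import math


def frame_rms(samples, frame_len):
    """Split a mono waveform into non-overlapping frames and return each
    frame's root-mean-square energy (a trailing partial frame is dropped)."""
    rms = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return rms


def mouth_openness(samples, frame_len, smoothing=0.5):
    """Hypothetical direct sound-to-motion map: louder frames open the mouth
    wider. Values lie in [0, 1]; an exponential moving average damps jitter."""
    energies = frame_rms(samples, frame_len)
    peak = max(energies, default=0.0) or 1.0  # avoid dividing by zero on silence
    openness, level = [], 0.0
    for e in energies:
        level = smoothing * level + (1 - smoothing) * (e / peak)
        openness.append(level)
    return openness
```

For example, `mouth_openness([0.0] * 160 + [0.5, -0.5] * 80, 80)` returns `[0.0, 0.0, 0.5, 0.75]`: two silent frames, then a loud burst that the moving average eases the mouth into. Loudness alone cannot tell a "B" from an "O", which is one reason learning full mouth shapes from video matters.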

When the team put Emo to the test, it articulated intelligible words across a variety of conditions. Emo even performed a track from “hello world_,” an album generated by AI, following the rhythm changes and holding sung vowels quite well. There is still room for improvement: hard stops such as the “B” sound and the puckered shape for “W” remain tricky.

Human faces contain dozens of muscles that work in close coordination with the vocal cords, whereas most robot heads have fewer actuators and follow pre-programmed patterns, which makes their movements stiff and unnatural. Emo sidesteps these constraints with a large number of motors, a flexible skin, and a learning method that mirrors real-world dynamics.

Lip motion captures over half of our attention during conversation, and when it does not match what is being said, even the most advanced robot designs can feel odd. Emo goes a long way toward closing that gap, and when combined with a language model, it gains a much better grasp of tone and context as a conversation progresses.
