Disney Researchers Train Robots to Land Like Stars When They Trip and Fall

Falling has long been a major issue in robotics. Engineers have spent years keeping their creations upright, turning twitchy bipedal walkers into virtual acrobats that can handle all kinds of uneven terrain or dodge obstacles with eerie mechanical poise. But when gravity finally gets the best of them, those same robots collapse to the ground like a pile of discarded junk, their joints either locking up rigidly or flailing madly until something breaks or bends.

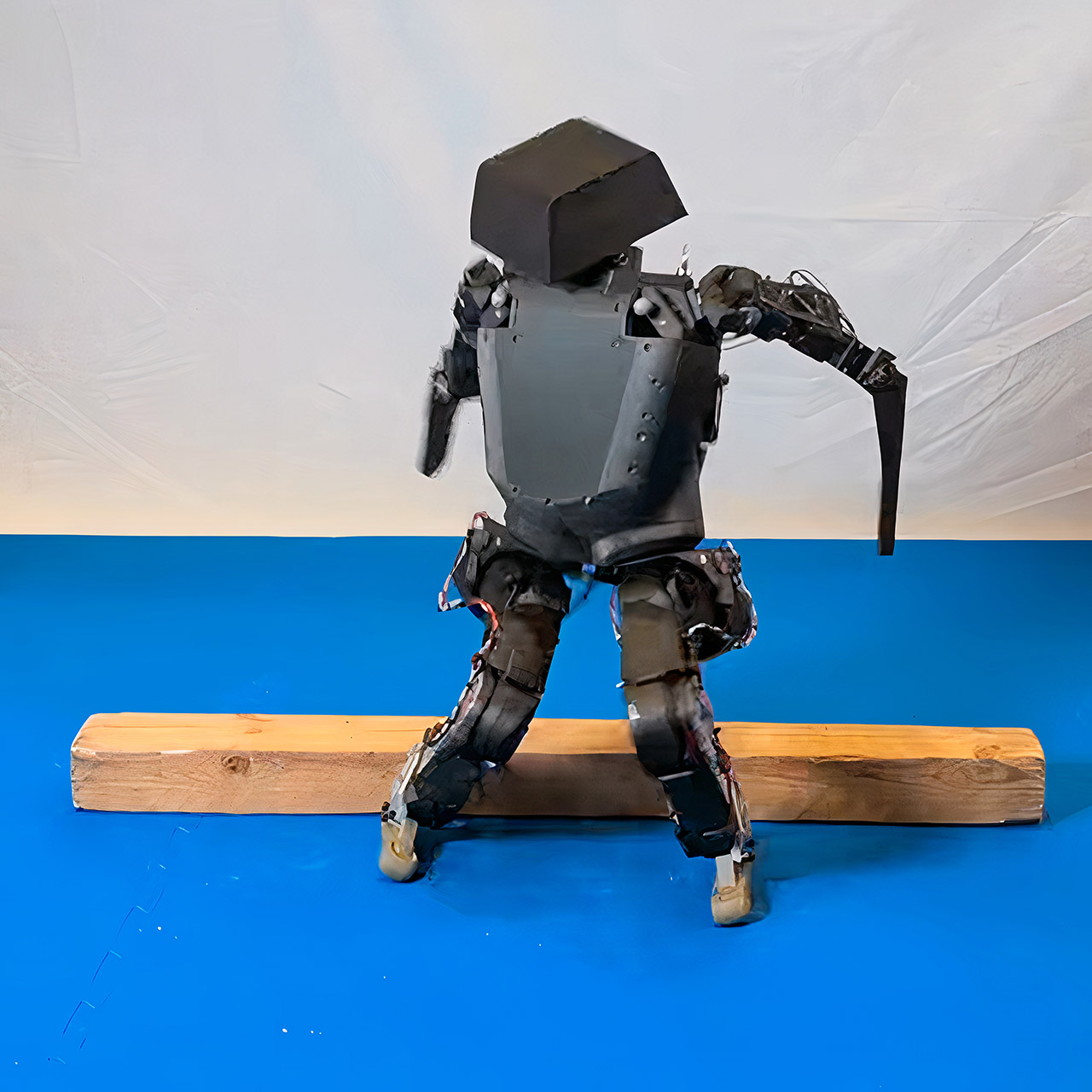

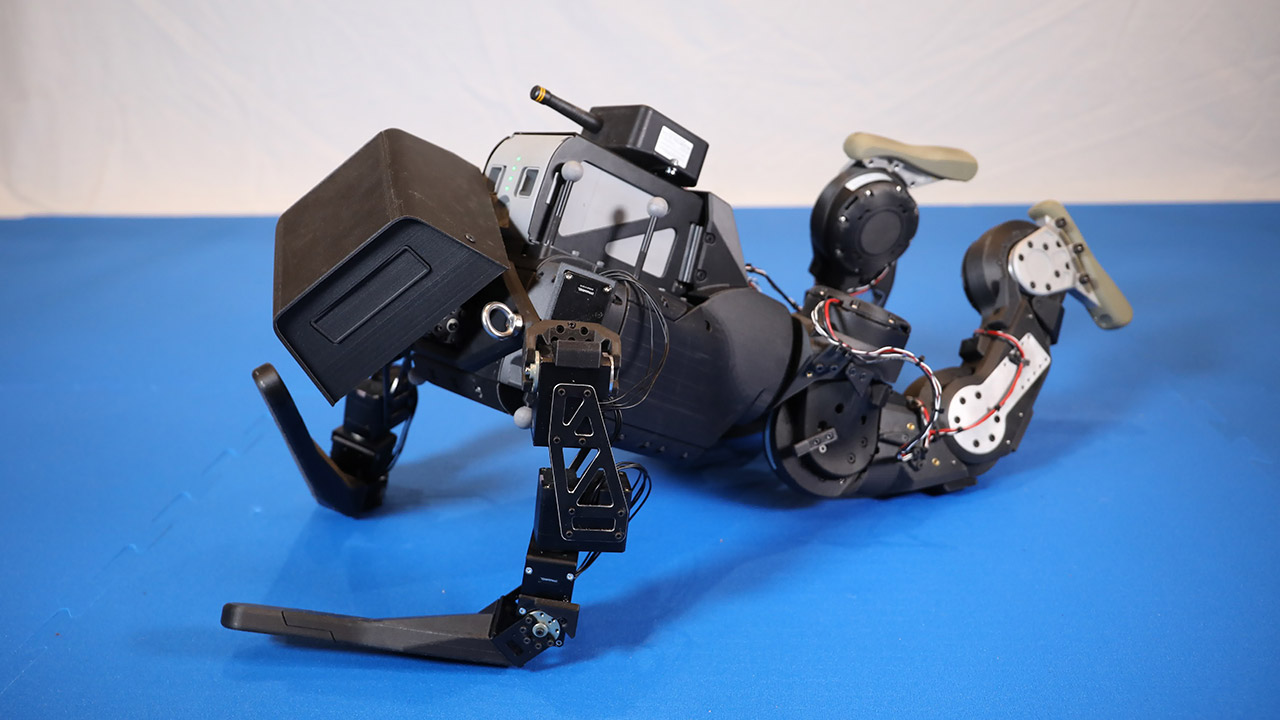

Damage reports from lab and warehouse floors paint a grim picture: cracked casings, shattered sensors, and maintenance bills are piling up faster than the scrap heap can absorb them. But Disney Research, the outfit behind the theme park animatronics and the strikingly lifelike droids in Disney's Star Wars attractions, has taken a completely different approach. Instead of fighting the fall, the team devised a way to roll with it, turning the tumble into something almost, sort of… controlled, and even downright impressive. The result is a system that lets a robot go down after a shove or a slip and land in whichever pose it chooses, cushioning the impact so the pricey parts don't get crushed.


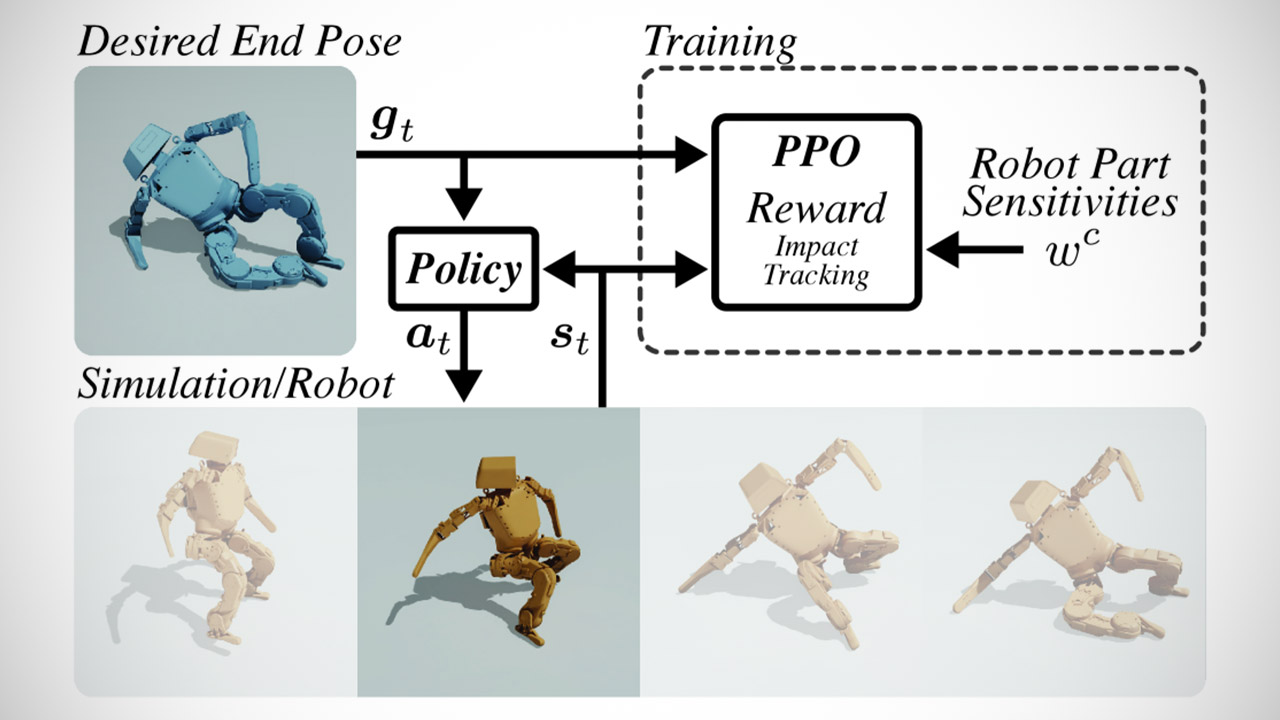

Recently, a group of Disney experts teamed up with engineers from the University of California and Boston Dynamics alumni to put this concept into action. They relied on reinforcement learning, in which the robot performs an enormous number of virtual tumbles in a computer simulator and learns from each one what works and what doesn't. On paper, the goal was straightforward: start from that mid-air flailing, absorb the impact without turning the poor robot into a heap of junk, and then settle into a final pose that either protects the delicate parts or looks suitably dramatic.

To pull it all off, the researchers devised a scoring system (in reinforcement-learning terms, a reward function) that kicks in as soon as the robot starts to fall. Every joint twist and limb extension earns points or penalties based on a few key criteria. If the robot reduces the force of the impact, especially on sensitive parts such as the head or battery pack, it scores highly. If its movements turn jerky, veer off course, or dissolve into wild flailing, points are deducted. Early in the fall the robot concentrates on softening the landing, but as it nears the ground it increasingly works to bend itself into the desired pose.
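To make the scoring idea concrete, here is a minimal sketch of what such a shaped reward could look like. It is not Disney's actual reward: the link names, sensitivity weights, term weights, and the linear height-based ramp are all assumptions chosen for illustration. It combines the three ingredients the article describes: impact penalties scaled by how fragile each part is, a penalty for jerky motion, and a pose-matching term that grows as the robot approaches the ground.

```python
from dataclasses import dataclass

# Hypothetical per-link sensitivity weights (not from the article): higher
# values make an impact on that link cost more.
SENSITIVITY = {"head": 5.0, "battery": 4.0, "torso": 1.0, "arm": 0.5, "leg": 0.2}


@dataclass
class StepInfo:
    impact_forces: dict   # link name -> peak contact force this step (N)
    action: list          # joint targets commanded this step (rad)
    prev_action: list     # joint targets from the previous step (rad)
    pose_error: float     # distance from the target end pose (rad)
    height: float         # torso height above the ground (m)


def fall_reward(info: StepInfo, start_height: float = 0.8,
                w_impact: float = 0.001, w_smooth: float = 0.1,
                w_pose: float = 1.0) -> float:
    """Shaped reward for one control step of a fall (assumed form)."""
    # Impact term: contact forces scaled by how fragile each link is.
    impact_cost = sum(SENSITIVITY.get(link, 1.0) * force
                      for link, force in info.impact_forces.items())
    # Smoothness term: squared change in commanded joint targets.
    smooth_cost = sum((a - b) ** 2
                      for a, b in zip(info.action, info.prev_action))
    # Pose term ramps in as the robot nears the ground:
    # weight 0 at the starting height, 1 at ground level.
    progress = min(max(1.0 - info.height / start_height, 0.0), 1.0)
    pose_cost = progress * info.pose_error
    return -(w_impact * impact_cost + w_smooth * smooth_cost
             + w_pose * pose_cost)


# Usage: the same 100 N hit costs far more on the head than on a leg.
base = dict(action=[0.1, 0.2], prev_action=[0.0, 0.2], pose_error=0.5, height=0.4)
head_hit = fall_reward(StepInfo(impact_forces={"head": 100.0}, **base))
leg_hit = fall_reward(StepInfo(impact_forces={"leg": 100.0}, **base))
print(head_hit < leg_hit)  # True
```

Blending the pose term in by height is one simple way to get the "cushion first, pose later" behavior; a time-based schedule would work just as well in a sketch like this.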

The whole process took a lot of preparation. The team set up thousands of starting conditions in the simulator to cover as many worst-case scenarios as possible, from a wobbly sideways stumble at 2 meters per second to a violent forward pitch with the hips rotating. Each episode begins with the robot's torso already knocked off balance and its velocities randomized, while steering clear of physically impossible starting positions. For the target end poses, they generated 24,000 candidate poses, dropped each one from waist height, and let physics settle it into a stable resting position.
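Randomized starting conditions like these are usually drawn from bounded ranges. The sketch below shows one way to do that; only the 2 m/s lateral bound comes from the article, while the field names and the other limits are hypothetical. Keeping every draw inside fixed bounds is one simple way to avoid implausible starts, not necessarily the method the researchers used.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class InitialState:
    lateral_velocity: float    # sideways torso velocity (m/s)
    pitch_rate: float          # torso tipping rate, positive = forward (rad/s)
    hip_yaw_rate: float        # rotation rate of the hips (rad/s)
    torso_tilt: float          # initial lean away from vertical (rad)


def sample_initial_state(rng: random.Random,
                         max_lateral: float = 2.0,
                         max_pitch_rate: float = 3.0,
                         max_yaw_rate: float = 2.0,
                         max_tilt: float = math.radians(30)) -> InitialState:
    """Draw one off-balance starting condition from bounded uniform ranges.

    Only the 2 m/s lateral bound comes from the article; the other
    limits are illustrative assumptions.
    """
    return InitialState(
        lateral_velocity=rng.uniform(-max_lateral, max_lateral),
        pitch_rate=rng.uniform(-max_pitch_rate, max_pitch_rate),
        hip_yaw_rate=rng.uniform(-max_yaw_rate, max_yaw_rate),
        torso_tilt=rng.uniform(-max_tilt, max_tilt),
    )


# Usage: a reproducible batch, one start per parallel simulated robot.
rng = random.Random(0)
batch = [sample_initial_state(rng) for _ in range(4000)]
print(len(batch))  # 4000
```

Seeding the generator makes a given batch of starts reproducible, which helps when comparing training runs.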

This avoided the common trap of relying mostly on belly flops and back slams, covering a broader range of outcomes, from lying gently belly-up to protective side rolls. On top of that, skilled artists created ten final poses in 3D software, including expressive stances such as a defensive crouch or a dramatic flop that seemed to channel a stage actor's presence, all while respecting the robot's physical limits. The team also deliberately injected random noise into the simulations, tiny pushes that nudged an elbow or a foot, to harden the policy against the unexpected quirks of real-world hardware.
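The "tiny pushes" are a form of disturbance injection. Here is a minimal sketch, assuming a per-step push probability and a maximum velocity change that are both made up for illustration, as are the link names; a real simulator would apply the push as a force or impulse through its own API.

```python
import random
from typing import Dict, List, Optional


def maybe_push(link_velocities: Dict[str, List[float]], rng: random.Random,
               push_prob: float = 0.02, max_dv: float = 0.3) -> Optional[str]:
    """Randomly nudge one link's linear velocity, in place.

    With probability push_prob per call (one call per simulation step),
    pick a link at random and add a uniform random velocity change of at
    most max_dv m/s on each axis. Returns the pushed link's name, or None.
    The probability and magnitude here are assumptions for illustration.
    """
    if rng.random() >= push_prob:
        return None
    link = rng.choice(sorted(link_velocities))
    dv = [rng.uniform(-max_dv, max_dv) for _ in range(3)]
    link_velocities[link] = [v + d for v, d in zip(link_velocities[link], dv)]
    return link


# Usage with hypothetical link names. In a real simulator the physics
# step would update these velocities between calls.
rng = random.Random(42)
links = {name: [0.0, 0.0, 0.0]
         for name in ("left_elbow", "right_elbow", "left_foot", "right_foot")}
pushes = sum(maybe_push(links, rng) is not None for _ in range(1000))
```

Because the pushes arrive at random times and on random links, the policy cannot memorize a single clean trajectory and has to learn to recover from off-nominal states.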

Training took two days on powerful graphics cards, with 4,000 digital robots tumbling in parallel. The policy itself was a modest neural network with only a few layers; it took in joint angles, velocities, and body posture and output motor commands 50 times per second. Training relied on proximal policy optimization (PPO), a reinforcement-learning algorithm that improves the robot's behavior in small, conservative steps so that no single update pushes it too far or too fast.
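The "small, conservative steps" come from PPO's clipped surrogate objective. The sketch below implements that standard loss in plain Python for a batch of sampled actions; it illustrates the general technique rather than the researchers' training code, and the clip value of 0.2 is a common default assumed here, since the article doesn't give one.

```python
import math
from typing import Sequence


def ppo_clip_loss(new_logp: Sequence[float], old_logp: Sequence[float],
                  advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Negative PPO clipped surrogate objective, averaged over a batch.

    new_logp / old_logp: log-probabilities of the taken actions under the
    current policy and the policy that collected the data.
    advantages: estimates of how much better each action was than expected.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any incentive to move the ratio
        # outside [1 - eps, 1 + eps], which keeps each update small.
        total += min(ratio * adv, clipped * adv)
    return -total / len(advantages)


# Usage: doubling an action's probability earns credit only up to 1 + eps.
print(ppo_clip_loss([math.log(2.0)], [0.0], [1.0]))  # -1.2
```

Minimizing this loss raises the probability of better-than-expected actions, but once the probability ratio leaves the clip range the gradient for that sample vanishes, so the policy drifts gradually instead of lurching.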

Of course, contact forces were kept to a minimum to avoid simulation hang-ups, and sensitivity levels were tuned for each section of the robot, so the legs carried a lower penalty weight while the head was treated with extra care. By the end of training, the robot could handle unexpected disturbances with ease, shifting smoothly from a default sprawl into a precisely shaped curl in the blink of an eye. The result was good enough to load the policy onto the real thing: a 16 kg metal biped with springy legs and Dynamixel arms, connected to a motion-capture rig that tracked every wobble and fed it back into the control loop, keeping those pixels talking to the pistons.

